Recurrent Neural Networks

Notation

E.g. named entity recognition

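A sentence of 9 words ⇒ 9 input features $x^{<1>}, \dots, x^{<9>}$

$x^{(i)<t>}$: t-th element of the i-th training example; $T_x^{(i)} = 9$ is the input length of example i

$y^{(i)<t>}$: t-th output element of the i-th training example; $T_y^{(i)} = 9$ is its output length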

Representation of words in a sentence:

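Vocabulary / dictionary: list of known words (e.g. 10000 entries)

One-hot representation for $x^{(i)<t>}$: a vector of dictionary size with a 1 at the word's index

Unknown words map to a placeholder word in the dictionary (e.g. `<UNK>`)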

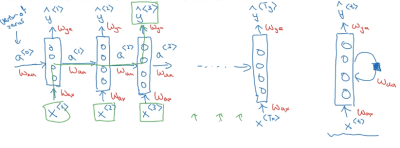
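A minimal sketch of the one-hot representation described above, assuming NumPy. The six-word vocabulary, the `<UNK>` spelling, and the helper name `one_hot` are illustrative assumptions, not part of the notes.

```python
import numpy as np

# Hypothetical toy vocabulary; a real one might hold ~10000 words.
vocab = ["<UNK>", "a", "and", "harry", "potter", "zulu"]
word_to_index = {word: i for i, word in enumerate(vocab)}

def one_hot(word):
    """Return the one-hot vector for `word`, falling back to <UNK>."""
    index = word_to_index.get(word.lower(), word_to_index["<UNK>"])
    vector = np.zeros(len(vocab))
    vector[index] = 1.0
    return vector

sentence = "Harry Potter and Hermione".split()
# One row per time step: shape (T_x, vocabulary size)
x = np.stack([one_hot(w) for w in sentence])
```

"Hermione" is not in the toy vocabulary, so its row has the 1 at the `<UNK>` index.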

Recurrent Neural Network

Problems with simple input-output FCN model:

- Inputs and outputs can have different lengths in different examples

- Doesn't share features learned across different positions in the text

Problem with RNN: only the words before the current word are used for its prediction (solution: bidirectional RNN)

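$a^{<0>} = 0$

$a^{<1>} = g(W_{aa} a^{<0>} + W_{ax} x^{<1>} + b_a)$, using tanh or ReLU as activation function

$\hat{y}^{<1>} = g(W_{ya} a^{<1>} + b_y)$, using sigmoid as activation function (softmax for multi-class outputs)

In general: $a^{<t>} = g(W_{aa} a^{<t-1>} + W_{ax} x^{<t>} + b_a)$ and $\hat{y}^{<t>} = g(W_{ya} a^{<t>} + b_y)$; the same weights are reused at every time step.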
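The forward pass above can be sketched in NumPy as follows. This is an illustration under assumptions: tanh for the hidden activation, sigmoid for the output, and random weights and sizes (`n_a`, `n_x`, `n_y`) chosen only to make the example run.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def rnn_forward(xs, W_aa, W_ax, W_ya, b_a, b_y):
    """Run a simple RNN over xs of shape (T_x, n_x)."""
    a = np.zeros(W_aa.shape[0])                      # initial activation is zero
    activations, predictions = [], []
    for x_t in xs:
        a = np.tanh(W_aa @ a + W_ax @ x_t + b_a)     # same weights at every step
        y_hat = sigmoid(W_ya @ a + b_y)
        activations.append(a)
        predictions.append(y_hat)
    return np.stack(activations), np.stack(predictions)

# Hypothetical sizes and random parameters, for illustration only.
rng = np.random.default_rng(0)
n_a, n_x, n_y, T_x = 5, 3, 1, 9
xs = rng.normal(size=(T_x, n_x))
a_all, y_all = rnn_forward(
    xs,
    rng.normal(size=(n_a, n_a)), rng.normal(size=(n_a, n_x)),
    rng.normal(size=(n_y, n_a)), np.zeros(n_a), np.zeros(n_y),
)
```

Each prediction depends only on inputs at or before its own time step, which is the limitation noted above.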

RNN types

Example so far: Many-to-many architecture $T_x = T_y$

Other types:

- Many-to-one: Sentiment classification: x = text, y = 1 or 0

- One-to-one: Like normal ANN

- One-to-many: Music generation. $\hat{y}$ is used as input for next time step

- Many-to-many with $T_x \neq T_y$: Machine translation (output length differs from input length). The encoder reads the input, the decoder generates the output

- Attention-based

Language model

What is the probability of a sentence?

Training set: Large corpus of text in the language

- Tokenize sentence

- One hot encode

- EOS token

- UNK token (unknown word)

$x^{<t>} = y^{<t-1>}$: during training, the true previous word is the input at step t

Given known words, what's the probability for next word?
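The sentence probability is the product of the per-step conditional probabilities, $P(y^{<1>}, \dots, y^{<T_y>}) = \prod_t P(y^{<t>} \mid y^{<1>}, \dots, y^{<t-1>})$. A small sketch, assuming the softmax outputs of each step are already available; the numbers below are made up for illustration.

```python
import numpy as np

def sentence_log_prob(step_distributions, token_ids):
    """Sum the log-probabilities the model assigns to each actual next token."""
    return sum(np.log(dist[tok]) for dist, tok in zip(step_distributions, token_ids))

# Hypothetical softmax outputs for a 3-token sentence over a 4-word vocabulary.
y_hats = np.array([
    [0.5, 0.2, 0.2, 0.1],
    [0.1, 0.6, 0.2, 0.1],
    [0.25, 0.25, 0.25, 0.25],
])
tokens = [0, 1, 3]           # indices of the actual words; the last could be EOS
log_p = sentence_log_prob(y_hats, tokens)
p = np.exp(log_p)            # equals 0.5 * 0.6 * 0.25
```

Summing logs instead of multiplying probabilities avoids underflow for long sentences.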

Sampling a sequence

Sample from softmax distribution $\hat{y}$
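Sampling can be sketched as a loop that draws a token from the softmax distribution and feeds it back as the next input until EOS appears. The `toy_step` function below is a hypothetical stand-in for a trained RNN: it conditions only on the previous token through a random logit table, whereas a real model would also carry its hidden state.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - np.max(z))        # shift for numerical stability
    return e / e.sum()

def sample_sequence(step_fn, eos_id, max_len, rng):
    """Sample tokens one by one; step_fn(prev_token) returns next-token logits."""
    tokens, prev = [], None
    for _ in range(max_len):
        probs = softmax(step_fn(prev))
        prev = int(rng.choice(len(probs), p=probs))   # draw from y_hat
        tokens.append(prev)
        if prev == eos_id:                            # stop at end of sentence
            break
    return tokens

# Hypothetical stand-in model: fixed random logits per previous token.
vocab_size, eos_id = 4, 3
rng = np.random.default_rng(0)
logit_table = rng.normal(size=(vocab_size + 1, vocab_size))  # row 0: sequence start

def toy_step(prev_token):
    row = 0 if prev_token is None else prev_token + 1
    return logit_table[row]

sampled = sample_sequence(toy_step, eos_id, max_len=20, rng=rng)
```

The `max_len` cap guards against sequences that never produce EOS.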

Character level language model

Use chars instead of words.

But more computationally expensive, since sequences become much longer.

Vanishing gradient

Problem: a basic RNN is not very good at capturing long-term dependencies (e.g. agreement between singular/plural subjects and their verbs). Each output is influenced more strongly by nearby inputs.

Exploding gradients can also happen, but they are easier to spot ⇒ NaN.

Solution: gradient clipping (if the gradient exceeds a threshold, clip it).

Solutions for the vanishing gradient follow in the next sections.
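A minimal sketch of gradient clipping. The notes do not say which variant is meant; this example assumes clipping by global norm (rescaling all gradients when their combined norm exceeds the threshold). Element-wise clipping with `np.clip` is another common choice.

```python
import numpy as np

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient arrays so their joint L2 norm is at most max_norm."""
    total_norm = np.sqrt(sum(np.sum(g ** 2) for g in grads))
    if total_norm <= max_norm:
        return grads                       # below threshold: leave untouched
    scale = max_norm / total_norm
    return [g * scale for g in grads]

# Hypothetical gradients with global norm sqrt(9 + 16 + 144) = 13.
grads = [np.array([3.0, 4.0]), np.array([[12.0]])]
clipped = clip_by_global_norm(grads, max_norm=1.0)
```

Rescaling keeps the gradient direction and only limits the step size.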

Gated Recurrent Unit

Improves capturing of long-term dependencies and mitigates the vanishing gradient problem.

Example sentence (with singular/plural dependency): The cat, which already ate …, was full.

$c^{<t>}$ = memory cell

For the GRU, $c^{<t>} = a^{<t>}$ (they differ later in the LSTM)

Candidate for replacing $c^{<t>}$: $\tilde{c}^{<t>} = \tanh(W_c[\Gamma_r * c^{<t-1>}, x^{<t>}] + b_c)$

Update gate: $\Gamma_u = \sigma(W_u[c^{<t-1>}, x^{<t>}] + b_u)$

Relevance gate: $\Gamma_r = \sigma(W_r[c^{<t-1>}, x^{<t>}] + b_r)$

$c^{<t>} = \Gamma_u * \tilde{c}^{<t>} + (1-\Gamma_u) * c^{<t-1>}$

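$\Gamma_u$ determines when the candidate replaces the memory cell value

If the update gate is very close to 0, $c^{<t>} \approx c^{<t-1>}$: the value is carried over almost unchanged across many time steps, which is what counters the vanishing gradient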
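The GRU equations above translate into a single step function. This is a sketch under assumptions: NumPy, a parameter dictionary with hypothetical key names (`W_u`, `b_u`, ...), and random weights used only to make it runnable.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(c_prev, x_t, p):
    """One GRU step: returns the new memory cell value."""
    concat = np.concatenate([c_prev, x_t])
    gamma_u = sigmoid(p["W_u"] @ concat + p["b_u"])          # update gate
    gamma_r = sigmoid(p["W_r"] @ concat + p["b_r"])          # relevance gate
    gated = np.concatenate([gamma_r * c_prev, x_t])
    c_tilde = np.tanh(p["W_c"] @ gated + p["b_c"])           # candidate
    return gamma_u * c_tilde + (1.0 - gamma_u) * c_prev

# Hypothetical sizes and random parameters.
n_c, n_x = 4, 3
rng = np.random.default_rng(0)
params = {f"W_{g}": rng.normal(size=(n_c, n_c + n_x)) for g in "urc"}
params.update({f"b_{g}": np.zeros(n_c) for g in "urc"})
c_prev = rng.normal(size=n_c)
c_next = gru_step(c_prev, rng.normal(size=n_x), params)

# A strongly negative update-gate bias drives gamma_u to ~0: memory is kept.
params_closed = dict(params, b_u=np.full(n_c, -50.0))
c_kept = gru_step(c_prev, rng.normal(size=n_x), params_closed)
```

With the gate closed, `c_kept` matches `c_prev` up to floating-point noise, regardless of the input.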

LSTM

$c^{<t>} \neq a^{<t>}$ (memory cell and activation are separate)

Candidate for replacing $c^{<t>}$: $\tilde{c}^{<t>} = \tanh(W_c[a^{<t-1>}, x^{<t>}] + b_c)$

Update gate: $\Gamma_u = \sigma(W_u[a^{<t-1>}, x^{<t>}] + b_u)$

Forget gate: $\Gamma_f = \sigma(W_f[a^{<t-1>}, x^{<t>}] + b_f)$

Output gate: $\Gamma_o = \sigma(W_o[a^{<t-1>}, x^{<t>}] + b_o)$

$c^{<t>} = \Gamma_u * \tilde{c}^{<t>} + \Gamma_f * c^{<t-1>}$

(separate forget and update gates replace the GRU's $1-\Gamma_u$ and $\Gamma_u$)

$a^{<t>} = \Gamma_o * \tanh(c^{<t>})$

Peephole connection: $c^{<t-1>}$ also feeds into the gate computations
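A sketch of one LSTM step following the equations above, without peephole connections. It applies tanh to the memory cell before the output gate, as in the standard LSTM formulation. Parameter names and sizes are hypothetical and the weights are random.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(a_prev, c_prev, x_t, p):
    """One LSTM step: returns the new activation and memory cell."""
    concat = np.concatenate([a_prev, x_t])
    c_tilde = np.tanh(p["W_c"] @ concat + p["b_c"])      # candidate
    gamma_u = sigmoid(p["W_u"] @ concat + p["b_u"])      # update gate
    gamma_f = sigmoid(p["W_f"] @ concat + p["b_f"])      # forget gate
    gamma_o = sigmoid(p["W_o"] @ concat + p["b_o"])      # output gate
    c_t = gamma_u * c_tilde + gamma_f * c_prev
    a_t = gamma_o * np.tanh(c_t)
    return a_t, c_t

# Hypothetical sizes and random parameters.
n_a, n_x = 4, 3
rng = np.random.default_rng(0)
params = {f"W_{g}": rng.normal(size=(n_a, n_a + n_x)) for g in "cufo"}
params.update({f"b_{g}": np.zeros(n_a) for g in "cufo"})
a_prev, c_prev = np.zeros(n_a), rng.normal(size=n_a)
x_t = rng.normal(size=n_x)
a_t, c_t = lstm_step(a_prev, c_prev, x_t, params)
```

Because the forget and update gates are independent, the cell can keep its old value (forget gate near 1, update gate near 0), overwrite it, or blend both.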

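Bidirectional RNN

Also takes information from later positions in the sequence (to the right of the current word)

A forward pass runs to the last unit and a backward pass runs from there back to the first; the graph is acyclic, with two activations per time step (forward, backward).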

Activation blocks can be GRU or LSTM
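A sketch of the bidirectional idea using plain tanh RNN blocks for brevity (GRU or LSTM blocks would slot in the same way). Function names, sizes, and the random weights are assumptions for illustration.

```python
import numpy as np

def run_direction(xs, W_aa, W_ax, b_a):
    """Run a simple tanh RNN over xs in the given order."""
    a = np.zeros(W_aa.shape[0])
    out = []
    for x_t in xs:
        a = np.tanh(W_aa @ a + W_ax @ x_t + b_a)
        out.append(a)
    return np.stack(out)

def birnn_forward(xs, fwd, bwd):
    """Return forward and backward activations concatenated per time step."""
    a_fwd = run_direction(xs, *fwd)
    a_bwd = run_direction(xs[::-1], *bwd)[::-1]   # re-align to original time order
    return np.concatenate([a_fwd, a_bwd], axis=1)

# Hypothetical sizes and random parameters (small scale to avoid saturation).
rng = np.random.default_rng(0)
n_a, n_x, T_x = 4, 3, 6

def make_params():
    return (rng.normal(scale=0.5, size=(n_a, n_a)),
            rng.normal(scale=0.5, size=(n_a, n_x)),
            np.zeros(n_a))

fwd_params, bwd_params = make_params(), make_params()
xs = rng.normal(size=(T_x, n_x))
a_bi = birnn_forward(xs, fwd_params, bwd_params)   # shape (T_x, 2 * n_a)
```

A prediction at step t would then be computed from both halves of row t, so it can use words on either side. The cost is that the whole sequence must be available before any prediction is made.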